July 28, 2018

Introduction

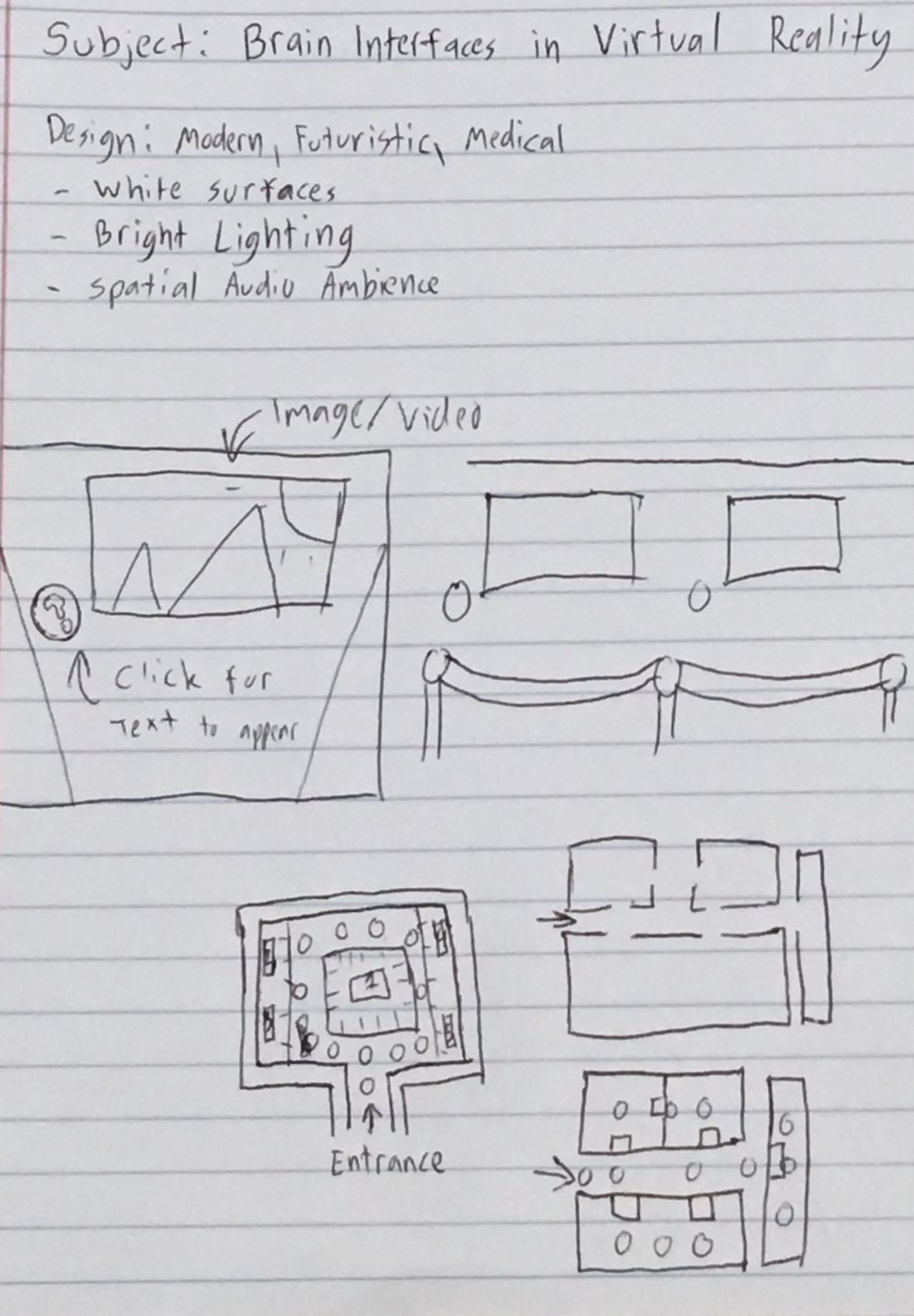

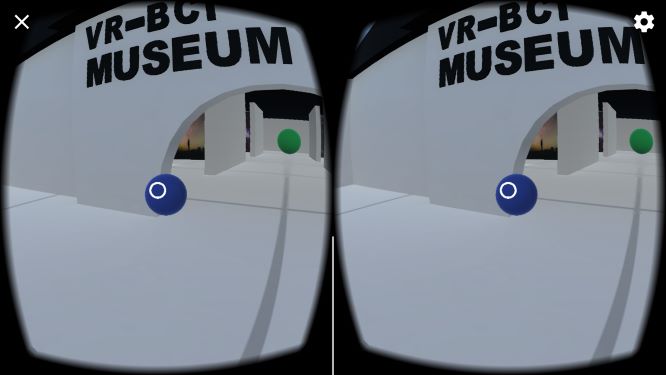

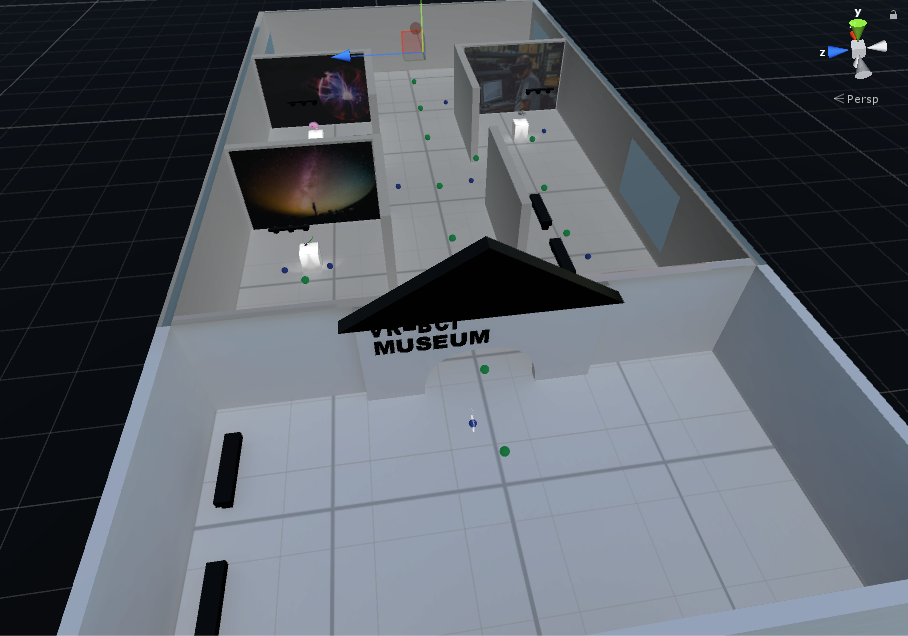

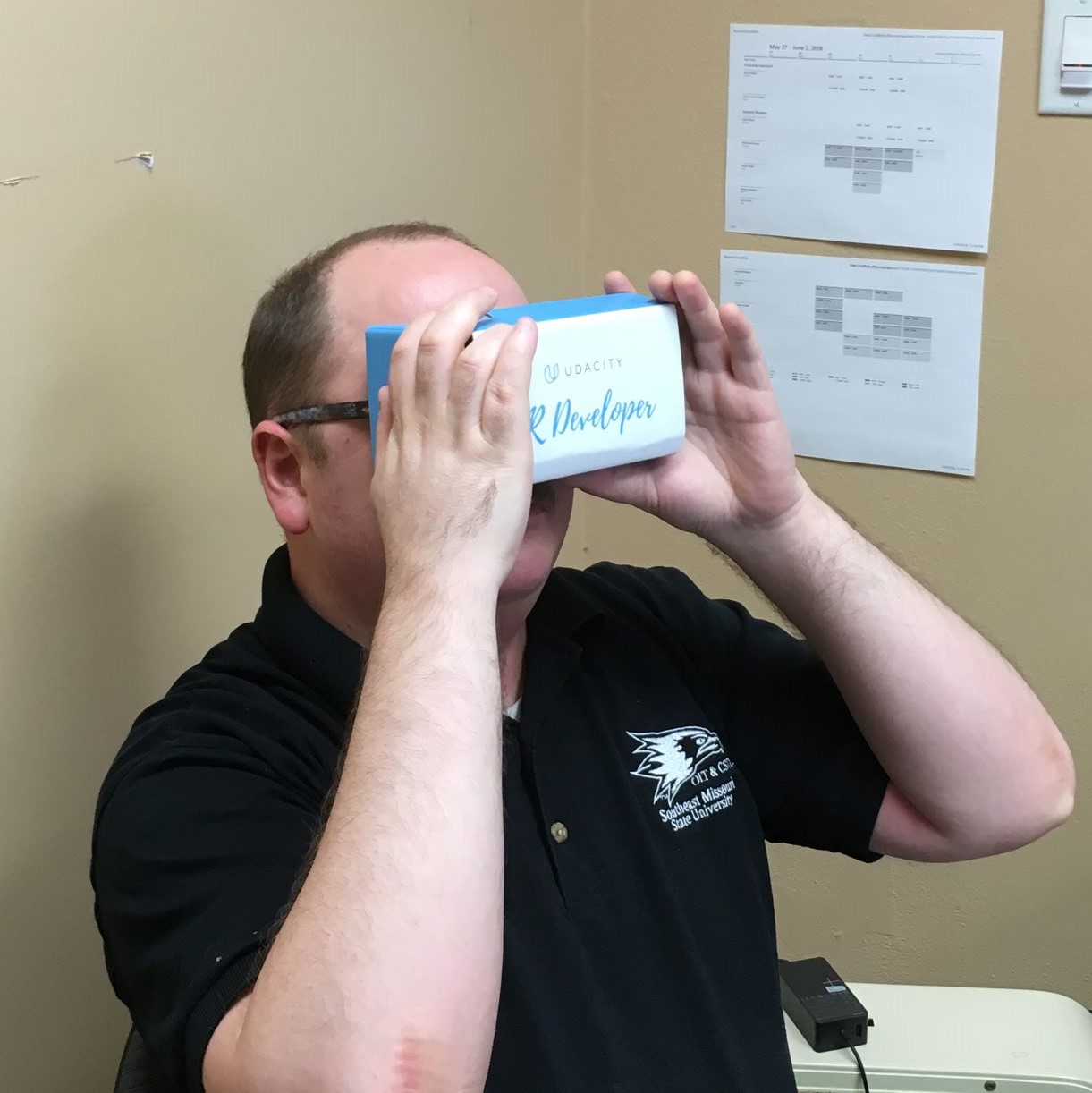

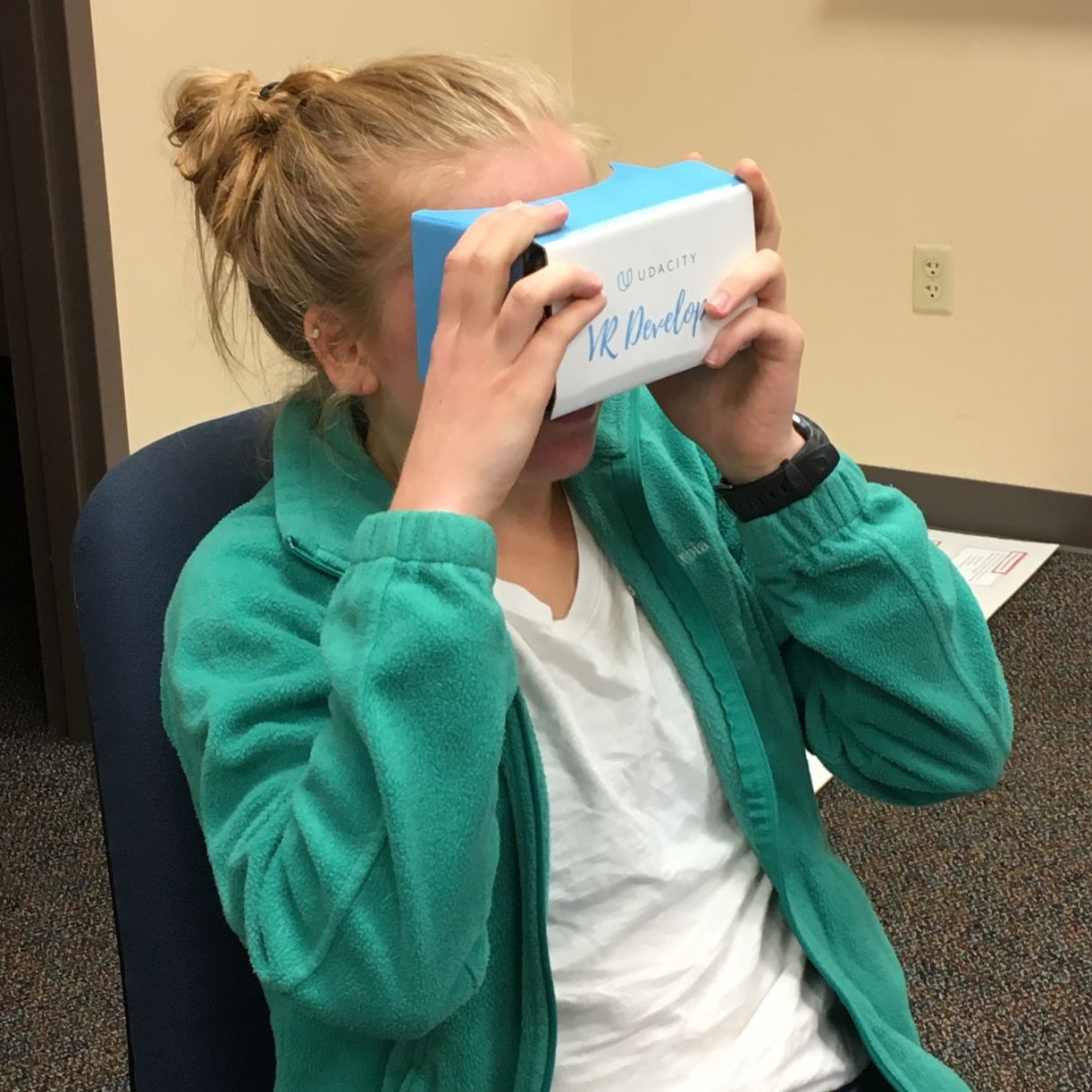

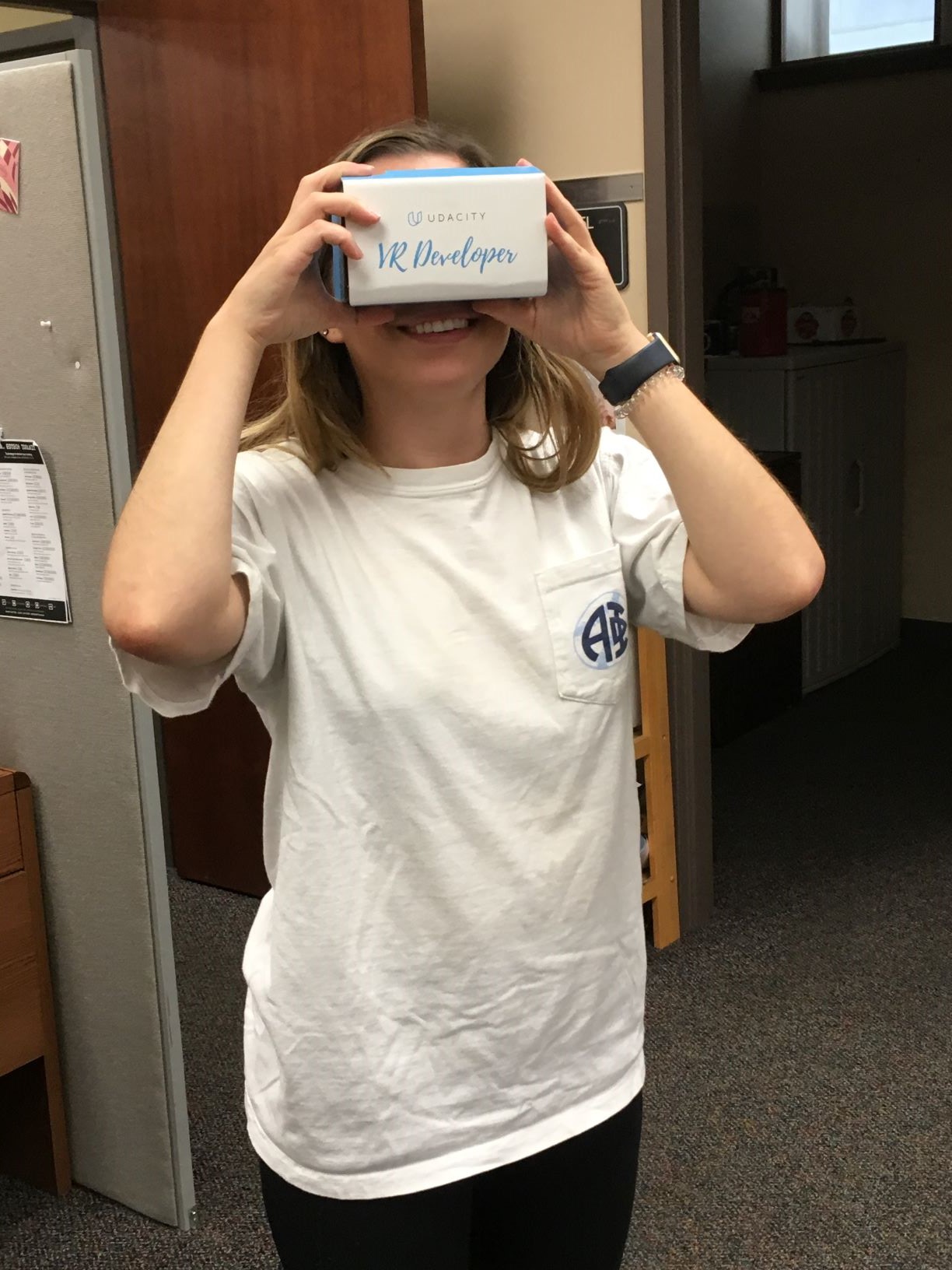

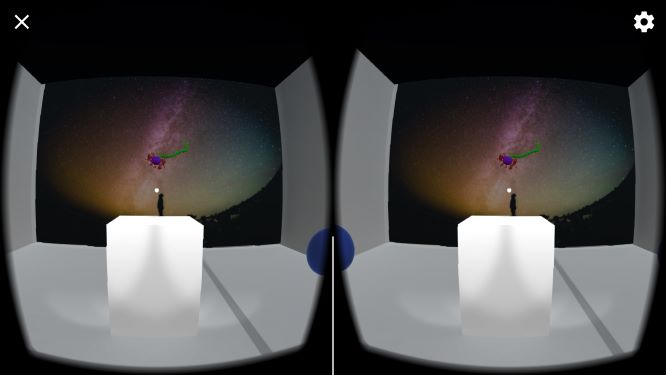

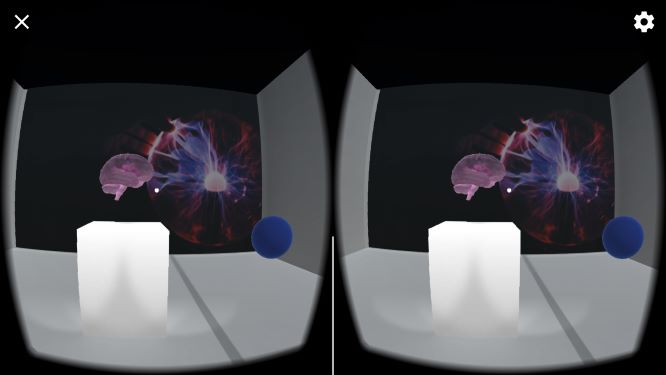

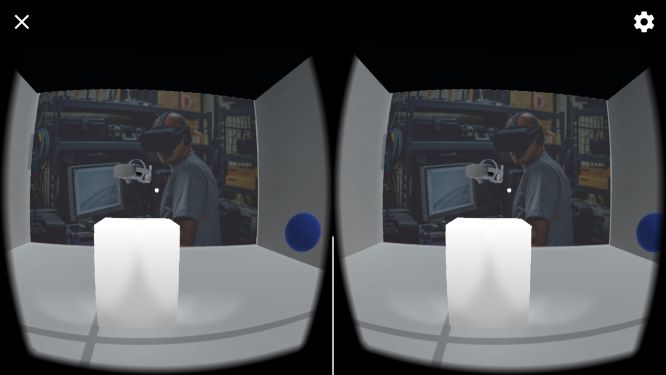

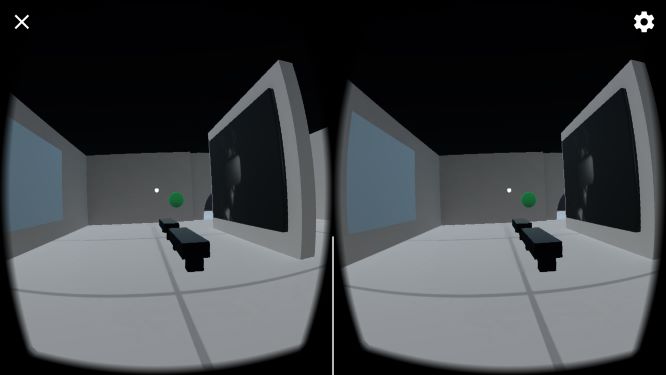

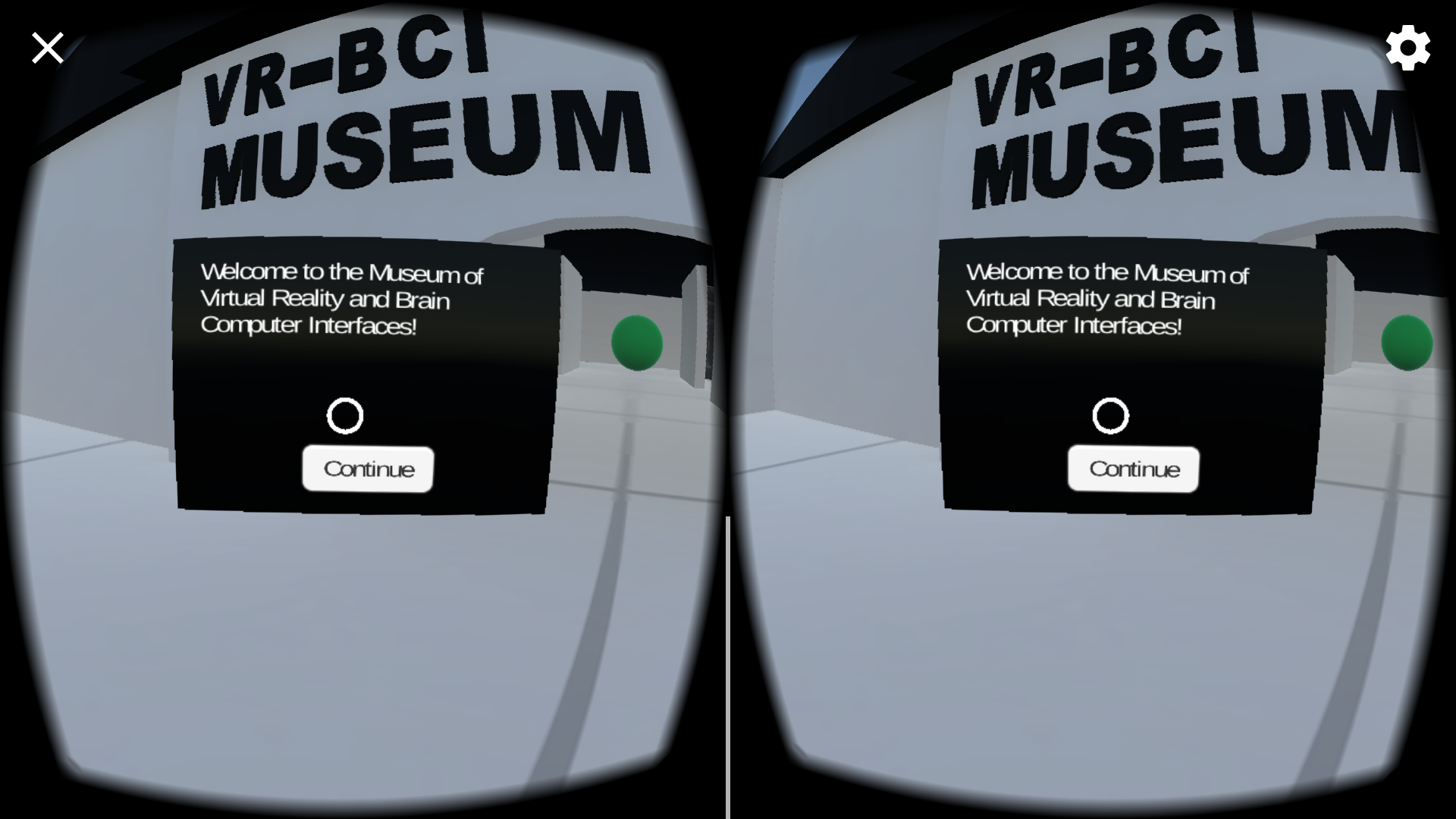

The Night at the Museum project is the fifth project in the Udacity VR Nanodegree track and the last project of term 2. It builds on everything the student has learned up to this point. The student must build a museum (or something akin to one) that showcases some field or business within virtual reality. As usual for this track, the entire project is built in the Unity3D engine with the GoogleVR library. The scene is expected to present images, videos, or models for viewing, to provide a way of moving through the VR scene, and to include audio. Documentation and user testing are also part of the process, just as they were in the fourth project.

I completed the project in roughly two weeks. Unlike previous Udacity projects, this one came with no specific resources. This article walks through the process, including the challenges I encountered and the end result.